What is a Data Lake in AWS?

An AWS data lake is a centralized repository for structured, semi-structured, and unstructured data at scale. Unlike conventional data warehouses, which require a schema to be defined before data is loaded, a data lake lets companies store raw data first and apply structure later, when it is transformed for analysis. Amazon S3, AWS Glue, and AWS Lake Formation sit at the core of an AWS data lake architecture: S3 provides storage, Glue handles cataloging and ETL, and Lake Formation manages security and access, which together keep storage, governance, and analysis cost-effective.

Why Data Lake Best Practices Matter

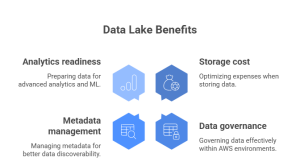

Many IT leaders invest in cloud data storage systems and only later discover that their data lakes are underperforming. Without defined practices, these repositories can quickly become “data swamps”: disorganized, insecure, and hard to query. Best practices help ensure:

- Cost efficiency in data storage

- Effective data governance in AWS environments

- Consistent metadata management for discoverability

- Readiness for advanced analytics and machine learning

For companies like yours, adopting best practices early reduces long-term operating overhead and improves the return on data investment.

Key AWS Data Lake Best Practices

Optimize Data Ingestion and Storage

Data arrives in many forms: logs, IoT streams, transactional records, and files. Simplify ingestion pipelines by using Amazon Kinesis for streaming data and AWS Glue for batch ETL. For storage, Amazon S3 remains the most dependable choice. Separate buckets by data sensitivity, and apply lifecycle policies to move or expire aging data so that storage costs stay under control. Compression and partitioning also make queries faster, because query engines read fewer bytes and can skip partitions that a query does not need.
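As a rough illustration of the partitioning and lifecycle ideas above, the following Python sketch builds a Hive-style partitioned object key and a single S3 lifecycle rule. The function names, the `raw/clickstream` prefix, and the 30/90/365-day thresholds are assumptions chosen for the example, not recommendations from this article. The rule dictionary follows the shape used by the S3 lifecycle configuration API.

```python
from datetime import date


def partitioned_key(dataset: str, event_date: date, filename: str) -> str:
    """Build a Hive-style partitioned S3 key (year=/month=/day=).

    Engines such as Athena can skip whole partitions when a query
    filters on these columns.
    """
    return (
        f"{dataset}/"
        f"year={event_date:%Y}/month={event_date:%m}/day={event_date:%d}/"
        f"{filename}"
    )


def lifecycle_rule(prefix: str, ia_days: int, glacier_days: int, expire_days: int) -> dict:
    """Return one lifecycle rule: tier objects down over time, then expire them.

    The day thresholds are hypothetical inputs; pick values that match
    your own retention requirements.
    """
    return {
        "ID": f"tier-and-expire-{prefix.strip('/')}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_days, "StorageClass": "GLACIER"},
        ],
        "Expiration": {"Days": expire_days},
    }


key = partitioned_key("raw/clickstream", date(2025, 3, 7), "events.parquet")
rule = lifecycle_rule("raw/", ia_days=30, glacier_days=90, expire_days=365)
```

With boto3, a rule like this would typically be wrapped as `{"Rules": [rule]}` and passed to `put_bucket_lifecycle_configuration`; that call is left out here so the sketch runs without AWS credentials.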

Ensure Data Governance and Security

Security is not optional; it is foundational. AWS Lake Formation provides fine-grained access control to protect sensitive data. Enterprises should combine role-based access through AWS IAM with encryption at rest (AWS KMS) and in transit (TLS). Apply governance policies aligned with the compliance standards that apply to your business. Strong governance also helps maintain trust as cloud data storage solutions scale across departments.
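To make the encryption points concrete, here is a minimal Python sketch that builds two configuration documents: a bucket policy that denies any request not sent over TLS, and a default-encryption setting that uses a KMS key. The bucket name and key alias are placeholders, and the helper names are hypothetical; treat this as one possible starting point rather than a complete security baseline.

```python
def deny_insecure_transport_policy(bucket: str) -> dict:
    """Bucket policy that rejects requests made without TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # aws:SecureTransport is "false" for plain-HTTP requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


def default_kms_encryption(kms_key_id: str) -> dict:
    """Default server-side encryption settings using a KMS key."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_id,
                },
                "BucketKeyEnabled": True,
            }
        ]
    }


# Placeholder names for illustration only.
policy = deny_insecure_transport_policy("example-data-lake-raw")
encryption = default_kms_encryption("alias/example-data-lake-key")
```

With boto3, the policy would usually be serialized with `json.dumps` and passed to `put_bucket_policy`, and the encryption document passed to `put_bucket_encryption`. Fine-grained table and column permissions in Lake Formation are a separate layer that this sketch does not cover.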

Implement Metadata and Cataloging

A data lake without metadata is like a library without a catalog. Use the AWS Glue Data Catalog as the central metadata store. Consistent tagging, managed schema evolution, and data lineage tracking help analysts and engineers discover and use data effectively. This is a key step in preventing duplication and wasted effort.
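As an illustration of what a catalog entry contains, the sketch below assembles a table definition for Parquet data in the shape the Glue Data Catalog expects for a table input. The table name, S3 location, columns, and the `owner` tag are hypothetical; a Glue crawler could produce a similar definition automatically.

```python
def glue_table_input(name, location, columns, partition_keys, tags=None):
    """Build a Glue Data Catalog table definition for Parquet files.

    columns and partition_keys are lists of (name, type) pairs.
    tags is a free-form dict stored under the table's Parameters.
    """
    parameters = {"classification": "parquet"}
    parameters.update(tags or {})
    return {
        "Name": name,
        "TableType": "EXTERNAL_TABLE",
        "Parameters": parameters,
        "PartitionKeys": [{"Name": n, "Type": t} for n, t in partition_keys],
        "StorageDescriptor": {
            "Columns": [{"Name": n, "Type": t} for n, t in columns],
            "Location": location,
            "InputFormat": "org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat",
            "OutputFormat": "org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat",
            "SerdeInfo": {
                "SerializationLibrary": "org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe"
            },
        },
    }


# Hypothetical clickstream table, partitioned by date parts.
table = glue_table_input(
    name="clickstream",
    location="s3://example-data-lake-raw/raw/clickstream/",
    columns=[("user_id", "string"), ("event_type", "string"), ("ts", "timestamp")],
    partition_keys=[("year", "string"), ("month", "string"), ("day", "string")],
    tags={"owner": "analytics-team"},
)
```

With boto3, a definition like this could be registered through the Glue client's `create_table(DatabaseName=..., TableInput=table)` call. Keeping ownership and classification in `Parameters` is one simple way to make tables searchable, though it is not the only tagging approach.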

Leverage Scalability and Automation

AWS data lake architecture is designed to scale, but automation is what keeps it efficient as it grows. Lifecycle management policies, automatic schema detection (for example, with AWS Glue crawlers), and serverless ETL jobs conserve engineering bandwidth. Companies can rely on AWS Lambda for event-driven processing, which keeps data pipelines responsive as volumes increase.
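Event-driven processing with Lambda often starts from an S3 "object created" notification. The sketch below shows one way a Python handler might pull the bucket and key out of such an event. The handler body is a placeholder (it only prints), and the sample event is trimmed to the fields the code reads.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs from an S3 event notification.

    Object keys arrive URL-encoded in the event, so they are decoded here.
    Records without an "s3" section are skipped.
    """
    objects = []
    for record in event.get("Records", []):
        s3 = record.get("s3")
        if not s3:
            continue
        bucket = s3["bucket"]["name"]
        key = unquote_plus(s3["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event, context):
    """Lambda entry point: a real pipeline would validate or transform each object."""
    objects = extract_s3_objects(event)
    for bucket, key in objects:
        print(f"new object: s3://{bucket}/{key}")
    return {"processed": len(objects)}


# Trimmed sample event for local experimentation.
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "example-data-lake-raw"},
                "object": {"key": "raw/clickstream/year%3D2025/my+file.json"},
            }
        }
    ]
}
result = handler(sample_event, None)
```

Keeping the parsing in a separate function makes it easy to test locally without deploying anything.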

Enable Advanced Analytics and Machine Learning

Once your data lake is optimized, connect it to tools like Amazon Athena, Amazon Redshift Spectrum, or Amazon SageMaker. This enables interactive SQL queries directly on data in S3, predictive models, and machine learning at scale. With a strong foundation in place, IT teams can shift from simply storing data to generating insights that directly support decision-making.
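As a small example of querying the lake, the following sketch builds an Athena-style SQL statement that filters on partition columns, so the engine only needs to scan the matching partitions. It assumes a hypothetical table partitioned by string columns named `year` and `month`; the database, table, and column names are illustrative.

```python
import re

# Only allow simple identifiers, because they are interpolated into SQL.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def partition_pruned_query(database, table, columns, year, month):
    """Build a SELECT that restricts the scan to one year/month partition."""
    for ident in (database, table, *columns):
        if not _IDENTIFIER.match(ident):
            raise ValueError(f"unsafe identifier: {ident!r}")
    column_list = ", ".join(columns)
    return (
        f"SELECT {column_list} "
        f"FROM {database}.{table} "
        f"WHERE year = '{year:04d}' AND month = '{month:02d}'"
    )


query = partition_pruned_query(
    "lake_raw", "clickstream", ["user_id", "event_type"], 2025, 3
)
```

A string like this could then be submitted through the Athena console or an SDK call such as boto3's `start_query_execution`. Filtering on partition columns is usually one of the most effective ways to cut both query time and the amount of data scanned.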

Common Mistakes to Avoid in AWS Data Lakes

- Treating the data lake as a dumping ground without a clear strategy

- Skipping metadata management in AWS, so data is all but impossible to locate later

- Ignoring governance, creating security holes

- Failing to balance cost reduction against business growth

- Applying a one-size-fits-all schema to varied data sets

Avoiding these mistakes keeps your AWS environment business-ready, agile, and secure.

Future Trends in AWS Data Lake Management

As of 2025, AI-assisted cataloging and self-service governance are becoming the norm. Cloud data storage solutions are expected to integrate more closely with compliance monitoring tools. Multi-cloud data lakes, in which AWS operates alongside Azure or GCP environments, are another trend. Companies are also turning to generative AI for automated documentation and anomaly detection across data pipelines. These trends are shaping how businesses design and manage data lakes in AWS for scalability and compliance.

How Data Engineering Services Improve AWS Data Lakes

While AWS provides solid tools, success frequently depends on skilled implementation. Partnering with a data engineering team helps provide:

- Tailored AWS data lake architecture aligned with business objectives

- Ingestion pipelines for structured and unstructured data, optimized according to best practices

- Hands-on assistance with data governance in AWS for compliance-intensive sectors

- Ongoing monitoring to sustain performance as data volumes grow

At Techmango, we partner with companies like yours to develop data lakes from simple repositories into scalable insight-driven systems.

Conclusion: Building Data Lakes with AWS

Building and governing a successful AWS data lake is not merely a matter of technology; it is a matter of discipline, governance, and foresight. By adhering to best practices and steering clear of common mistakes, organizations can unlock the full value of their data assets.

If you want to optimize or create a data lake from the ground up, Techmango’s data engineering services can assist you every step of the way—from architecture design to integration with advanced analytics.

Let’s create smarter, more secure data lakes that expand with your business.

Frequently Asked Questions

An AWS Data Lake is a centralized cloud repository that stores all types of data—structured and unstructured—at scale. For UAE enterprises, it enables cost-efficient data management, real-time analytics, and AI-driven insights, supporting digital transformation across industries like real estate, logistics, and finance.

Without proper best practices, data lakes can become disorganized and insecure. Implementing governance, metadata management, and cost optimization helps UAE organizations maintain compliance with data protection laws, enhance efficiency, and maximize ROI from their cloud investments in AWS.

UAE businesses can secure their AWS Data Lakes using AWS Lake Formation, IAM policies, and encryption (KMS/TLS). Implementing governance frameworks ensures compliance with UAE data privacy standards, protecting sensitive business and customer data while maintaining trust and operational integrity.

Key mistakes include storing unorganized data without a plan, ignoring metadata management, skipping governance, and overspending on unused storage. Avoiding these pitfalls helps UAE enterprises maintain efficient, scalable, and compliant data environments aligned with their growth strategies.

Techmango delivers enterprise-grade AWS Data Lake architecture, governance, and integration tailored for UAE businesses. With expertise in cloud engineering and data analytics, Techmango ensures scalability, cost efficiency, and compliance—helping organizations turn their data into actionable intelligence for digital transformation.