According to IBM's Cost of a Data Breach Report 2024, the global average cost of a data breach reached $4.88 million. Almost 60% of companies experienced at least one AI-assisted attack in the past year, and zero-day vulnerabilities rose 35% worldwide.

As the threat landscape accelerates, Generative AI is giving businesses a way to get ahead: anticipating attackers instead of reacting after they strike.

Microsoft's Security Copilot supports analysts in real-time threat hunting, and Google Cloud's Sec-PaLM 2 enables context-aware threat response. Generative AI is now driving the next stage of cyber defense, bringing intelligence, automation, and adaptability to every layer of protection.

At Techmango, our Generative AI Services help teams turn cybersecurity into a proactive, data-driven capability that strengthens resilience and makes operations more secure.

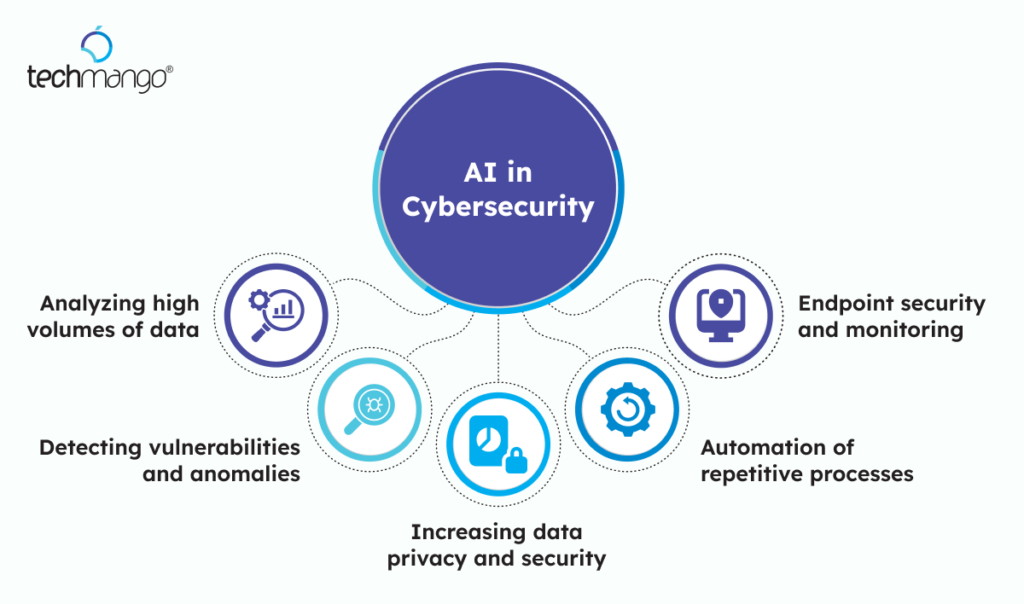

AI in Cybersecurity

What Is Generative AI’s Role in Cybersecurity Today?

What is Generative AI used for in cybersecurity?

Generative AI adds intelligence and adaptability to modern cybersecurity systems. It can simulate attacks, recognize emerging patterns, and improve response processes across the organization.

Use cases include:

- Simulating phishing and ransomware campaigns to prepare defenses before real attacks

- Detecting network anomalies and unusual user behavior

- Generating privacy-safe synthetic data for training AI models

- Supporting analysts with AI copilots and knowledge systems

How Generative AI helps make sense of large volumes of security data

Modern enterprises process huge volumes of data from logs, devices, and sensors every day. Generative AI models, especially LLMs fine-tuned for cybersecurity, can help interpret these signals in real time and surface patterns that traditional rule-based systems miss.

For example, CrowdStrike uses AI to shorten threat detection times, and Darktrace applies self-learning AI to model an organization's normal digital footprint, which lets it flag anomalies more accurately.
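The baselining idea in the Darktrace example can be illustrated with a much simpler statistical check: learn what is normal for each host, then flag large deviations. The sketch below uses a per-host z-score on daily event counts. It is not how any vendor's product works. The host names, counts, and the `threshold` of 3 standard deviations are all illustrative assumptions.

```python
from statistics import mean, pstdev


def find_anomalies(
    history: dict[str, list[int]], today: dict[str, int], threshold: float = 3.0
) -> list[str]:
    """Flag hosts whose event count today is more than `threshold` standard
    deviations away from that host's own historical baseline."""
    flagged = []
    for host, counts in history.items():
        mu = mean(counts)
        sigma = pstdev(counts)
        observed = today.get(host, 0)
        if sigma == 0:
            # Perfectly flat baseline: any change at all counts as unusual.
            if observed != mu:
                flagged.append(host)
            continue
        z_score = (observed - mu) / sigma
        if abs(z_score) > threshold:
            flagged.append(host)
    return flagged


# Example: daily event counts per host over five days (made-up numbers).
history = {
    "web-01": [100, 110, 95, 105, 98],
    "db-01": [20, 22, 19, 21, 20],
}
today = {"web-01": 104, "db-01": 80}
print(find_anomalies(history, today))  # ['db-01']
```

Per-host baselines matter here. A jump to 80 events would be unremarkable on `web-01` but is far outside what `db-01` normally does.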

Key Use Cases: How Generative AI Improves Security Operations

Detecting vulnerabilities and anomalies

Generative AI can identify code vulnerabilities and misconfigurations before they are exploited. Techmango's AI-enabled vulnerability detection system integrates with DevSecOps pipelines, so it catches problems early, up to 40% faster than conventional scans.
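To show where such a check sits in a DevSecOps pipeline, here is a minimal sketch of a CI gate. It scans source text and returns a non-zero exit code when it finds something. The detection itself is a handful of regular-expression rules standing in for a model. The rule names and patterns are illustrative assumptions, not Techmango's system or any real scanner's rule set.

```python
import re

# Illustrative rules only; names and patterns are assumptions, not a product's rule set.
RULES = {
    "hardcoded-aws-key-id": re.compile(r"AKIA[0-9A-Z]{16}"),
    "hardcoded-password": re.compile(r"password\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE),
    "tls-verification-disabled": re.compile(r"verify\s*=\s*False"),
}


def scan(source: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) for every rule that matches a line."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


def ci_exit_code(source: str) -> int:
    """A non-zero exit code fails the pipeline stage when anything is found."""
    return 1 if scan(source) else 0


sample = 'import requests\nrequests.get(url, verify=False)\npassword = "hunter2"\n'
print(scan(sample))  # [(2, 'tls-verification-disabled'), (3, 'hardcoded-password')]
```

In practice, a model-based detector would replace or augment `RULES`. The gate shape stays the same: findings with line numbers for the developer, and an exit code for the pipeline.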

Synthetic data for security testing and model training

Training models on real-world data can raise privacy concerns. Generative AI can produce synthetic data that is realistic yet privacy-safe.

How can synthetic data help cybersecurity?

Synthetic data lets companies simulate many attack types and test their defenses against them without exposing real data. One Techmango client used synthetic data to test more than 10,000 attack scenarios during a compliance review, improving readiness while remaining compliant.
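The sketch below shows what labeled synthetic attack scenarios can look like. A simple rule-based generator stands in for a generative model, and it produces login events for brute-force, password-spray, and benign traffic. The event fields, user names, and scenario shapes are assumptions for illustration. The IP addresses come from 203.0.113.0/24, a range reserved for documentation by RFC 5737, so no real system is referenced.

```python
import random
from dataclasses import dataclass


@dataclass
class LoginEvent:
    user: str
    source_ip: str
    success: bool
    scenario: str  # ground-truth label, kept so detections can be scored later


def synthetic_ip(rng: random.Random) -> str:
    # 203.0.113.0/24 is reserved for documentation (RFC 5737).
    return f"203.0.113.{rng.randint(1, 254)}"


def generate_scenario(kind: str, rng: random.Random, n: int = 20) -> list[LoginEvent]:
    users = [f"user{i:03d}" for i in range(50)]  # hypothetical user names
    if kind == "brute_force":
        # One account hammered from one IP; the last attempt succeeds.
        target, ip = rng.choice(users), synthetic_ip(rng)
        return [LoginEvent(target, ip, i == n - 1, kind) for i in range(n)]
    if kind == "password_spray":
        # One IP tries a single guess against many distinct accounts.
        ip = synthetic_ip(rng)
        return [LoginEvent(u, ip, False, kind) for u in rng.sample(users, n)]
    if kind == "benign":
        # Ordinary traffic: varied users and IPs, mostly successful.
        return [
            LoginEvent(rng.choice(users), synthetic_ip(rng), rng.random() > 0.05, kind)
            for _ in range(n)
        ]
    raise ValueError(f"unknown scenario: {kind}")


rng = random.Random(7)  # a fixed seed makes the test set reproducible
dataset = [e for kind in ("brute_force", "password_spray", "benign")
           for e in generate_scenario(kind, rng)]
print(len(dataset))  # 60
```

Because every event carries its `scenario` label, a detection rule can be run over `dataset` and scored for precision and recall without touching production logs.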

Automating threat hunting, incident response, and endpoint protection

Generative AI automates threat hunting and speeds up incident triage. Integrated with SOAR platforms, Techmango's GenAI systems have cut threat detection time by more than half, accelerating response and containment across hybrid environments.
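One small piece of automated triage is deciding which alerts go to an analyst and which go to an automated playbook. The sketch below ranks alerts by severity multiplied by asset criticality and splits them at a threshold. The weights, the 1 to 5 criticality scale, and the escalation threshold of 20 are assumptions, not values from any SOAR product.

```python
from dataclasses import dataclass

# Assumed severity weights; tune to your own SOC policy.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 7, "critical": 10}


@dataclass
class Alert:
    alert_id: str
    host: str
    severity: str
    asset_criticality: int  # assumed scale: 1 (lab machine) to 5 (business-critical)


def priority(alert: Alert) -> int:
    return SEVERITY_WEIGHT[alert.severity] * alert.asset_criticality


def triage(alerts: list[Alert], escalate_at: int = 20) -> tuple[list[Alert], list[Alert]]:
    """Split alerts into (escalate to analyst, hand to automated playbook),
    each ordered highest priority first."""
    ranked = sorted(alerts, key=priority, reverse=True)
    escalate = [a for a in ranked if priority(a) >= escalate_at]
    automate = [a for a in ranked if priority(a) < escalate_at]
    return escalate, automate


alerts = [
    Alert("A1", "web-01", "high", 2),
    Alert("A2", "db-02", "critical", 5),
    Alert("A3", "laptop-9", "low", 1),
    Alert("A4", "web-02", "medium", 4),
]
escalate, automate = triage(alerts)
print([a.alert_id for a in escalate], [a.alert_id for a in automate])
# ['A2'] ['A1', 'A4', 'A3']
```

A generative model could enrich each alert, for example with a summary or a suggested severity, before this step. The routing rule itself stays simple and auditable.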

Challenges and Risks of Using Generative AI in Security Contexts

What are the challenges of using Generative AI in cybersecurity?

While GenAI strengthens defense, attackers can also misuse it to craft convincing phishing content, create deepfakes, or exploit model weaknesses. Governance frameworks must ensure that models are deployed and audited safely.

Bias, explainability, and interpretability problems

AI-based security systems need to be transparent. Black-box models make it hard to justify their actions during audits or compliance reviews. Explainable AI ensures that each output can be traced and verified.
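Traceable output is easiest to see with a deliberately transparent model. In a linear risk score, each feature's contribution is simply its weight times its value, so the explanation comes for free. For black-box models, post-hoc attribution tools such as SHAP play a similar role. The features and weights below are hypothetical.

```python
# Hypothetical features and weights for a deliberately transparent (linear) risk model.
WEIGHTS = {
    "failed_logins": 0.5,
    "new_country": 3.0,
    "off_hours": 1.5,
    "privileged_account": 2.0,
}


def score_with_explanation(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the risk score and each feature's contribution, largest first."""
    contributions = [(name, weight * features.get(name, 0.0)) for name, weight in WEIGHTS.items()]
    total = sum(value for _, value in contributions)
    # Sorting by contribution shows an auditor why the score is high.
    contributions.sort(key=lambda item: item[1], reverse=True)
    return total, contributions


score, why = score_with_explanation(
    {"failed_logins": 4, "new_country": 1, "off_hours": 0, "privileged_account": 1}
)
print(score)   # 7.0
print(why[0])  # ('new_country', 3.0)
```

Storing `why` alongside each decision gives auditors a record of which signals drove the outcome.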

Data privacy, compliance, and model security

Companies must enforce strict data governance: encrypting training data, restricting access, and monitoring for model drift. Techmango ensures every AI deployment follows governance frameworks aligned with GDPR, ISO 27001, and HIPAA.
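Monitoring for drift means watching whether the data a model sees in production has shifted away from what it was trained on. One common way to quantify this is the population stability index (PSI), sketched below. The equal-width binning, the `eps` floor for empty bins, and the example data are assumptions. Values above roughly 0.25 are often treated as significant drift, but that figure is a rule of thumb, not a standard.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population stability index between a reference sample and a live sample.
    Bins are equal-width over the reference range; eps avoids log(0) for empty bins."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)  # clamp outliers into edge bins
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]            # feature values at training time
live_same = list(baseline)
live_shifted = [0.5 + i / 200 for i in range(100)]  # values have drifted upward
print(psi(baseline, live_same))            # 0.0
print(psi(baseline, live_shifted) > 0.25)  # True
```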

Best Practices and Governance for Using Generative AI Safely

Fine-tuning models to your threat landscape

Security challenges vary by industry and technology stack. Fine-tuning AI models on organization-specific data keeps them accurate and relevant, and helps them catch threats specific to your business faster.

How do you reduce bias and privacy risks in Generative AI?

Apply strict data filtering, adversarial testing, and differential privacy controls. Continuous retraining keeps models from locking in outdated or biased threat patterns.
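Differential privacy is easiest to see in its classic form, the Laplace mechanism: add noise scaled to sensitivity divided by epsilon before releasing a statistic. The sketch below applies it to a counting query, which has sensitivity 1. The record fields and the choice of `epsilon` are illustrative assumptions. Naive floating-point sampling like this has known weaknesses, so production systems should rely on a vetted library such as OpenDP.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with rate 1/scale is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy via the Laplace mechanism.
    A counting query changes by at most 1 when one record is added or removed
    (sensitivity 1), so the noise scale is 1 / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical records: 30 of 100 users failed MFA.
records = [{"user": f"u{i}", "failed_mfa": i % 10 < 3} for i in range(100)]
rng = random.Random(0)
print(private_count(records, lambda r: r["failed_mfa"], epsilon=1.0, rng=rng))
```

Smaller `epsilon` means more noise and stronger privacy. The released value varies from run to run by design.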

Use RAG, knowledge graphs, and agentic workflows

Retrieval-Augmented Generation (RAG) and knowledge graphs ground AI outputs in verifiable, organization-owned data. Agentic workflows automate cross-system checks, linking event detection, risk scoring, and response.
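The retrieval half of RAG can be sketched in a few lines: rank internal documents against the question, then build a prompt that tells the model to answer only from those sources and cite them. In this sketch, simple term overlap stands in for the embedding similarity a real system would use. The document ids and contents are hypothetical, and the call to an actual LLM is left out.

```python
import re


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k document ids ranked by term overlap with the query.
    Term overlap is a stand-in for embedding similarity in this sketch."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda doc_id: len(q & tokenize(docs[doc_id])), reverse=True)
    return [doc_id for doc_id in ranked[:k] if q & tokenize(docs[doc_id])]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Ground the model in retrieved, organization-owned text and ask for citations."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in retrieve(query, docs, k))
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


docs = {  # hypothetical internal knowledge base
    "runbook-17": "After ransomware detection, isolate the host and rotate credentials.",
    "policy-04": "VPN access requires MFA for all contractors.",
    "asset-db-02": "db-02 stores customer payment records.",
}
print(retrieve("What should we do after ransomware detection on db-02?", docs))
# ['runbook-17', 'asset-db-02']
```

Requiring cited ids in the answer is what makes the output verifiable: a reviewer can check each claim against the named source.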

Keeping humans in the loop and reviewing continuously

Generative AI augments security professionals rather than replacing them. Techmango's human-in-the-loop controls ensure that each AI action can be reviewed, explained, refined, and validated against real data.
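A human-in-the-loop control can be as simple as an approval gate. The model proposes an action with a rationale, low-risk actions proceed automatically, and anything riskier waits for an analyst, with every decision written to an audit log. The action names, the 1 to 5 risk scale, and the auto-approval threshold are assumptions. This sketch only records decisions and does not call any real SOAR playbook.

```python
from dataclasses import dataclass, field


@dataclass
class ProposedAction:
    action: str     # e.g. "isolate_host" (hypothetical action names)
    target: str
    risk: int       # assumed scale: 1 (low impact) to 5 (business-critical)
    rationale: str  # the model's explanation, kept for the reviewer


@dataclass
class ReviewQueue:
    auto_approve_below: int = 3  # assumed policy threshold
    executed: list[ProposedAction] = field(default_factory=list)
    pending: list[ProposedAction] = field(default_factory=list)
    audit_log: list[tuple[str, str, str, str]] = field(default_factory=list)

    def submit(self, p: ProposedAction) -> str:
        """Low-risk actions are marked executed; everything else waits for an analyst."""
        if p.risk < self.auto_approve_below:
            self.executed.append(p)
            decision = "auto-executed"
        else:
            self.pending.append(p)
            decision = "awaiting analyst"
        self.audit_log.append((p.action, p.target, decision, p.rationale))
        return decision

    def review(self, p: ProposedAction, approved: bool, analyst: str) -> None:
        """Record the analyst's decision; only approved actions are marked executed."""
        self.pending.remove(p)
        if approved:
            self.executed.append(p)
        verdict = "approved" if approved else "rejected"
        self.audit_log.append((p.action, p.target, verdict, analyst))


queue = ReviewQueue()
block = ProposedAction("block_ip", "203.0.113.7", 1, "IP matched a known scanner")
isolate = ProposedAction("isolate_host", "db-02", 5, "ransomware-like file writes")
print(queue.submit(block))    # auto-executed
print(queue.submit(isolate))  # awaiting analyst
queue.review(isolate, approved=True, analyst="alice")
```

Keeping the model's rationale and the analyst's name in the same log is what lets each step be reviewed and explained later.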

Why Techmango Is Your Partner for GenAI-Driven Cybersecurity

Techmango provides Generative AI cybersecurity solutions with predictive models, smart automation, and explainable AI.

Our capabilities include:

- AI-driven threat analysis integrated with enterprise SIEM systems

- Privacy-safe synthetic data and test environments

- RAG-based decision intelligence for contextual insight

- Autonomous AI agents for routine security operations and incident response

- End-to-end compliance with GDPR, SOC 2, and ISO standards

With more than 35 enterprise AI projects delivered and more than 60 AI engineers and MLOps specialists, Techmango has helped companies secure their digital systems at scale.

For example, one international manufacturer used our AI anomaly detection system to reduce false positives by 45% and avoid more than $1.2 million in financial risk within six months.

Wrap Up

Cybersecurity is entering a phase where agility beats sheer strength. Generative AI gives companies the data intelligence and automation they need to stay resilient against today's fast-changing threats.

For today's CEOs, CTOs, and security leaders, success depends on adopting AI responsibly and strategically.

With Techmango's Generative AI Services, companies can turn cybersecurity into an intelligent, self-improving defense that keeps data safe, maintains compliance, and strengthens the organization's overall digital health.