Data engineering now serves as the critical layer between raw data and organizational decision-making, combining architecture, pipelines, and governance to deliver information at scale. As companies continue to digitize their operations, data engineering services form the backbone of analytics, automation, and AI. Done well, data engineering translates into operational resilience, greater agility, and tangible business results.

Why Modern Businesses Rely on Data Engineering Services

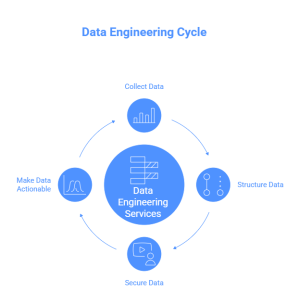

Enterprise data environments are increasingly complex. Data streams in from a variety of sources, including ERP systems, IoT sensors, financial transactions between buyers and sellers, and customer interactions before, during, and after a digital transaction. Without sound engineering practices and well-structured data, these inputs remain siloed, inconsistent, and unusable. Data engineering services overcome this complexity by building end-to-end ecosystems that make data available, secure, and actionable.

Recent studies highlight the scale of this shift:

- 78% of enterprises report challenges with siloed data.

- Over 60% expect to adopt real-time analytics by 2025.

- 72% cite regulatory compliance as the primary driver of governance initiatives.

These figures underline a common reality: organizations can no longer afford to treat data engineering as an optional business function; it is a prerequisite for sustainable growth and innovation.

Core Data Engineering Approaches Driving High-Impact Results

Building Robust Data Pipelines

Modern pipelines are no longer linear workflows but dynamic ecosystems. They need to support both streaming and batch processing, recover gracefully from failures, and deliver usable data with minimal latency. Newer paradigms such as Data Mesh decentralize pipeline ownership further by letting domain teams define and manage their own flows. Observability layers give engineers real-time visibility when they need to diagnose a bottleneck or anomaly.
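
To make "recovering gracefully from failures" concrete, here is a minimal Python sketch in which one transformation serves both a streaming path (a generator that emits records as they arrive) and a batch path (which simply drains the same generator), while malformed records are routed to a dead-letter list instead of stopping the run. The order-event schema and all function names are hypothetical, chosen only for illustration.

```python
from typing import Iterable, Iterator, Optional


def transform(record: dict) -> dict:
    """Hypothetical transformation: normalize a raw order event."""
    return {
        "order_id": str(record["order_id"]),
        "amount": round(float(record["amount"]), 2),
    }


def process_record(record: dict, dead_letter: list) -> Optional[dict]:
    """Transform one record; quarantine it instead of crashing on bad input."""
    try:
        return transform(record)
    except (KeyError, ValueError, TypeError) as exc:
        dead_letter.append({"record": record, "error": repr(exc)})
        return None


def stream(records: Iterable[dict], dead_letter: list) -> Iterator[dict]:
    """Streaming path: emit each clean record as soon as it is processed."""
    for record in records:
        out = process_record(record, dead_letter)
        if out is not None:
            yield out


def batch(records: Iterable[dict]) -> tuple:
    """Batch path: drain the same streaming logic and return all results at once."""
    dead_letter: list = []
    good = list(stream(records, dead_letter))
    return good, dead_letter


if __name__ == "__main__":
    raw = [
        {"order_id": 1, "amount": "19.999"},
        {"order_id": 2},                   # missing "amount"
        {"order_id": 3, "amount": "abc"},  # not a number
    ]
    good, dlq = batch(raw)
    print(good)      # [{'order_id': '1', 'amount': 20.0}]
    print(len(dlq))  # 2
```

Sharing a single code path between batch and streaming keeps the two modes from drifting apart, and the dead-letter records can be inspected and replayed once the upstream issue is fixed.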

Leveraging Cloud Data Platforms

The cloud has transformed scalability in data engineering. Organizations can now take advantage of elastic platforms such as Snowflake, Amazon Redshift, Azure Synapse, and Google BigQuery, which scale from terabytes to petabytes. Multi-cloud adoption is accelerating rapidly, with 92% of enterprises now running workloads across hybrid environments. Cloud-native integration with AI services lets enterprises run advanced models where the data lives, minimizing latency and reducing operational overhead.

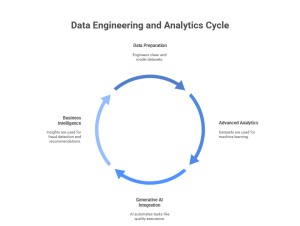

Advanced Analytics & Machine Learning Integration

Advanced analytics cannot exist without data engineering. Engineers prepare, clean, and model datasets for machine learning and predictive modeling. Generative AI has sped up engineering tasks such as data quality assurance, anomaly reporting, and pipeline optimization. Many repetitive tasks can now be automated, shortening development timelines while supporting business intelligence use cases such as fraud detection, personalized recommendations, and smart supply chain management.
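
The preparation work described above, turning raw records into model-ready features, can be sketched in plain Python. This minimal example assumes hypothetical rows with an `id`, one numeric feature, and a label; it deduplicates, drops unlabeled rows, imputes missing values with the median, and min-max scales the result. Real projects would typically use a dataframe library and fit the imputation and scaling on training data only, to avoid leaking information from evaluation data.

```python
from statistics import median
from typing import Hashable, List, Tuple


def prepare_features(rows: List[dict], feature: str, label: str) -> List[Tuple[float, Hashable]]:
    """Turn raw rows into (scaled_feature, label) pairs ready for model training."""
    # 1. Deduplicate on "id", keeping the first occurrence.
    seen, unique = set(), []
    for row in rows:
        if row["id"] not in seen:
            seen.add(row["id"])
            unique.append(row)

    # 2. Drop rows without a label; a supervised model cannot learn from them.
    labeled = [r for r in unique if r.get(label) is not None]

    # 3. Impute missing feature values with the median of the observed ones.
    observed = [r[feature] for r in labeled if r.get(feature) is not None]
    fill = median(observed)
    values = [r[feature] if r.get(feature) is not None else fill for r in labeled]

    # 4. Min-max scale to [0, 1]; guard against a zero range.
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0
    return [((v - lo) / span, r[label]) for v, r in zip(values, labeled)]


if __name__ == "__main__":
    raw = [
        {"id": 1, "amount": 10.0, "is_fraud": 0},
        {"id": 1, "amount": 10.0, "is_fraud": 0},     # duplicate
        {"id": 2, "amount": None, "is_fraud": 1},     # missing feature
        {"id": 3, "amount": 30.0, "is_fraud": 0},
        {"id": 4, "amount": 50.0, "is_fraud": None},  # missing label
    ]
    print(prepare_features(raw, "amount", "is_fraud"))
    # [(0.0, 0), (0.5, 1), (1.0, 0)]
```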

Best Practices for Achieving High-Impact Results

Establishing Data Quality and Governance

High-impact results depend on trust. Organizations are building governance frameworks, metadata catalogs, and lineage tracking into their architectures. Data Fabric architectures are becoming more common, weaving governance and quality management into distributed workflows. This approach provides compliance and transparency across an increasingly complex data ecosystem.
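
As a rough illustration of building quality checks and lineage into a pipeline, the sketch below runs named validation rules against a batch, decides whether the batch may be published, and records a lineage entry either way. The rule names, table names, and `LineageRecord` fields are hypothetical; production systems would use a metadata catalog or a dedicated data-quality tool rather than this hand-rolled structure.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List

Rule = Callable[[dict], bool]


@dataclass
class LineageRecord:
    """Minimal lineage entry: where data came from, where it went, and how it fared."""
    source: str
    target: str
    transformation: str
    row_count: int
    failed_checks: Dict[str, int]
    published: bool
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def run_quality_checks(rows: List[dict], rules: Dict[str, Rule]) -> Dict[str, int]:
    """Return {rule_name: failing_row_count} for every rule that any row violates."""
    failures = {}
    for name, rule in rules.items():
        bad = sum(1 for row in rows if not rule(row))
        if bad:
            failures[name] = bad
    return failures


def publish_with_gate(rows: List[dict], source: str, target: str,
                      transformation: str, rules: Dict[str, Rule]) -> LineageRecord:
    """Decide whether the batch may be published and record lineage either way.

    Actually writing to the target is out of scope for this sketch.
    """
    failures = run_quality_checks(rows, rules)
    return LineageRecord(
        source=source,
        target=target,
        transformation=transformation,
        row_count=len(rows),
        failed_checks=failures,
        published=not failures,
    )


if __name__ == "__main__":
    rules = {
        "customer_id_present": lambda r: r.get("customer_id") is not None,
        "amount_non_negative": lambda r: r.get("amount", 0) >= 0,
    }
    rows = [
        {"customer_id": "c1", "amount": 12.5},
        {"customer_id": None, "amount": 3.0},
        {"customer_id": "c3", "amount": -1.0},
    ]
    record = publish_with_gate(rows, "crm.orders_raw", "analytics.orders", "normalize_orders", rules)
    print(record.published, record.failed_checks)
    # False {'customer_id_present': 1, 'amount_non_negative': 1}
```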

Automating Workflows for Efficiency

Automation is now standard engineering practice. Orchestration frameworks such as Apache Airflow, Prefect, and Dagster automate the scheduling, transformation, and recovery of pipelines. Pipelines can be self-healing, rerunning failed jobs without manual intervention, while policy-based automation ensures compliance rules are applied consistently. The result is faster delivery with lower operational risk.
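
Orchestrators like the ones named above let you configure retries on individual tasks; the core idea behind that "self-healing" behavior is retry with exponential backoff. The plain-Python sketch below shows only that pattern and is not the API of Airflow, Prefect, or Dagster; `flaky_load` is a hypothetical task used for demonstration.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    max_retries: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `task`, retrying failures with exponential backoff (base_delay * 2**n).

    Re-raises the last exception once `max_retries` retries are exhausted.
    `sleep` is injectable so the behavior can be tested without waiting.
    """
    attempt = 0
    while True:
        try:
            return task()
        except Exception as exc:
            if attempt >= max_retries:
                raise
            delay = base_delay * (2 ** attempt)
            logging.warning(
                "Task failed (%r); retry %d/%d in %.1fs",
                exc, attempt + 1, max_retries, delay,
            )
            sleep(delay)
            attempt += 1


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_load() -> str:
        """Hypothetical task that hits two transient errors before succeeding."""
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("transient network error")
        return "loaded"

    print(run_with_retries(flaky_load, base_delay=0.1))  # loaded
```

Retries only heal transient faults, so tasks should also be idempotent: rerunning a load after a partial failure must not duplicate data.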

Scaling Data Solutions with AI & Cloud

Scalability comes from combining serverless architectures with AI-driven optimization. Cloud platforms automatically allocate computing resources based on demand, while AI models continuously tune workloads for performance and cost. Together, these capabilities make it possible to process millions of transactions efficiently and in real time across distributed environments.
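
Demand-based allocation often reduces to a target-tracking rule: compare the observed load per worker with a target and resize proportionally. The sketch below applies the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current * currentMetric / targetMetric), with a tolerance band and min/max bounds. Serverless platforms make comparable decisions internally; the parameter names and defaults here are assumptions, and the sketch covers only the demand-based half of the paragraph, not AI-driven workload tuning.

```python
import math


def desired_workers(
    current_workers: int,
    load_per_worker: float,
    target_per_worker: float,
    min_workers: int = 1,
    max_workers: int = 100,
    tolerance: float = 0.1,
) -> int:
    """Proportional target-tracking scaling decision, clamped to [min, max]."""
    ratio = load_per_worker / target_per_worker
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: keep the current size to avoid flapping.
        desired = current_workers
    else:
        desired = math.ceil(current_workers * ratio)
    return max(min_workers, min(max_workers, desired))


if __name__ == "__main__":
    print(desired_workers(4, 150, 100))  # 6: load is 50% above target
    print(desired_workers(4, 105, 100))  # 4: within the 10% tolerance band
    print(desired_workers(8, 25, 100))   # 2: scale in when load drops
```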

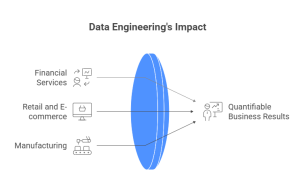

Real-World Impact of Data Engineering Services

Engineering excellence delivers demonstrable value across a wide range of industries:

Healthcare: Data Fabric architectures bring together electronic health records and IoT device data to support AI-enabled diagnostics.

Financial Services: Streaming pipelines deliver real-time data for fraud detection and algorithmic trading at global scale.

Retail and E-commerce: Data Mesh models let individual business units build their own analytics while adhering to enterprise-wide standards.

Manufacturing: Predictive analytics can reduce downtime by anticipating equipment failures, potentially saving millions of dollars in maintenance costs each year.

Each of these examples shows how proven engineering practices not only improve operations but also deliver quantifiable business results.

Choosing the Right Data Engineering Partner

Choosing a partner involves much more than evaluating technical expertise. Companies should assess data engineering service providers against the following criteria:

- Depth in modern frameworks (e.g., Spark, Kafka, Snowflake, dbt).

- Experience with Data Mesh and Data Fabric implementations.

- Observability and monitoring capabilities for complex ecosystems.

- Compliance with industry-specific regulations.

- Ability to scale from pilot projects to enterprise deployment.

The best partners act as strategic advisors, ensuring that architectures are not only technically sound but also support the client’s long-term goals.

Conclusion: Transform Data into High-Impact Business Value

Achieving excellence in data engineering requires a disciplined combination of pipelines, governance, cloud-native platforms, and advanced analytics. Data Mesh, Data Fabric, AI observability, and automation-first pipelines are rapidly advancing engineering practices to meet 2025 enterprise standards.

Data engineering services are no longer strictly back-office functions. They have become strategic enablers that help organizations turn disparate information into measurable business value. Organizations that adopt structured engineering practices build the resilience, agility, and insight needed to compete at scale.

At Techmango, our data engineering practice is built on a proven principle: delivering measurable impact. Our team uses advanced frameworks, cloud-native platforms, and AI enablement to deliver cost-effective, robust solutions that keep scaling over the long term. By aligning engineering practices with business strategy, Techmango helps enterprises transform raw data into actionable intelligence, enabling faster decisions, stronger compliance, and sustained long-term growth.

Frequently Asked Questions

High-impact data engineering means designing systems that drive real business value: resilient analytics, faster decision-making, AI enablement, and cost-effective operations. It is not just about storing data, but about turning raw inputs into trusted insights at scale.

Techmango focuses on:

- Building robust, resilient ingestion and pipeline architectures (streaming + batch)

- Leveraging cloud-native platforms (Snowflake, BigQuery, Redshift, etc.) for scalability

- Embedding governance, data quality, and lineage from the start

- Automating workflows and transformations using orchestration tools

- Integrating analytics & ML to make data “action-ready”

Governance and quality are foundational. Without trust in the data (accuracy, lineage, compliance), insights are unreliable. Techmango embeds metadata cataloging, lineage tracking, role-based access controls, and quality checks into pipelines so stakeholders can trust analytics & AI models.

Data engineering prepares, cleanses, and shapes data into feature-ready formats. It ensures that ML pipelines operate at scale and integrate with tools such as TensorFlow, PyTorch, or MLflow. This infrastructure enables use cases such as predictive modeling, anomaly detection, personalization, and real-time analytics.

With well-executed engineering, organizations often see:

- Faster, more trustworthy analytics

- Reduced latency between data capture and decision

- Better compliance and risk management

- More efficient operations (e.g. predictive maintenance, fraud detection)

- Scalability for growth without breaking systems
