Data is the building block of every modern enterprise. For organizations that serve multiple industries, the speed and quality of business outcomes depend on how quickly and effectively they capture, process, and draw insights from data. Scalable data engineering is a core component of this transformation, making businesses more resilient, more agile in their analytics, and better equipped to make decisions at every level.

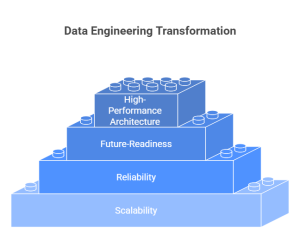

Techmango built its data engineering services with scalability, reliability, and future-readiness at their core. By combining established frameworks with advanced capabilities, companies can move from disparate data systems to a single high-performance architecture that serves both immediate goals and future growth.

Building Robust Data Ingestion Pipelines

Data enters organizations from many systems and devices, including applications, cloud platforms, IoT devices, and external sources. Without a solid ingestion strategy, organizations often miss valuable insights or receive them too late. Techmango’s approach centers on real-time and batch ingestion pipelines built for high-volume, high-velocity data.

Using technologies such as Apache Kafka, AWS Kinesis, and Azure Event Hubs, Techmango builds systems that let data flow continuously from source systems into centralized repositories. The result is low latency and a single source of truth that supports an organization’s analytics, reporting, and operational efficiency.
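As a rough illustration of how real-time and batch ingestion can share one code path, the sketch below groups an event stream into micro-batches and writes each batch to a sink. It is a minimal sketch using only the Python standard library: the event shape, the `micro_batches` and `ingest` helpers, and the in-memory `repository` are assumptions made for illustration, and a production pipeline would read from a broker such as Kafka rather than a generator.

```python
from __future__ import annotations

from typing import Callable, Iterable, Iterator

Event = dict  # hypothetical event shape, e.g. {"source": "sensor", "value": 1}


def micro_batches(events: Iterable[Event], batch_size: int) -> Iterator[list[Event]]:
    """Group a (possibly unbounded) event stream into fixed-size batches."""
    batch: list[Event] = []
    for event in events:
        batch.append(event)
        if len(batch) >= batch_size:
            yield batch
            batch = []
    if batch:  # flush the final, partially filled batch
        yield batch


def ingest(
    events: Iterable[Event],
    sink: Callable[[list[Event]], None],
    batch_size: int = 100,
) -> int:
    """Write every batch to the sink and return the number of events ingested."""
    total = 0
    for batch in micro_batches(events, batch_size):
        sink(batch)
        total += len(batch)
    return total


# Usage: an in-memory list stands in for the centralized repository.
repository: list[list[Event]] = []
stream = ({"source": "sensor", "value": i} for i in range(5))
count = ingest(stream, repository.append, batch_size=2)
```

A batch size of 1 approximates event-at-a-time streaming, while larger batches trade latency for throughput.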

Seamless Data Integration Across Systems

Fragmented data sources often produce inconsistent reports and siloed insights. Techmango provides integration strategies that unify data across ERP, CRM, and custom business systems. Using tools such as Apache NiFi, Informatica, and dbt, Techmango connects cloud and on-premises data environments to improve the availability, consistency, and usability of data for advanced analytics.
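To make the idea of unifying ERP and CRM data concrete, here is a small sketch that maps two differently named record layouts onto one schema and merges them by customer key. The field names, the two mapping tables, and the "first source wins" merge rule are all hypothetical choices for illustration, not a description of any specific integration tool.

```python
from __future__ import annotations

# Hypothetical field mappings: source field name -> unified field name.
CRM_MAPPING = {"CustomerId": "customer_id", "Email": "email", "FullName": "name"}
ERP_MAPPING = {"cust_no": "customer_id", "mail_addr": "email", "credit_limit": "credit_limit"}


def to_unified(record: dict, mapping: dict[str, str]) -> dict:
    """Rename mapped source fields to the unified schema, dropping unmapped ones."""
    return {target: record[source] for source, target in mapping.items() if source in record}


def merge_by_key(*record_sets: list[dict], key: str = "customer_id") -> dict[str, dict]:
    """Merge unified records sharing a key; earlier sets take precedence on conflicts."""
    merged: dict[str, dict] = {}
    for records in record_sets:
        for record in records:
            target = merged.setdefault(str(record[key]), {})
            for field_name, value in record.items():
                target.setdefault(field_name, value)  # keep the first value seen
    return merged


# Usage: the CRM is treated as the preferred source for overlapping fields.
crm = [{"CustomerId": "C-1", "Email": "ana@example.com", "FullName": "Ana"}]
erp = [{"cust_no": "C-1", "mail_addr": "a.old@example.com", "credit_limit": 5000}]
unified = merge_by_key(
    [to_unified(r, CRM_MAPPING) for r in crm],
    [to_unified(r, ERP_MAPPING) for r in erp],
)
```

The precedence rule is the design decision that matters most here: it determines which system is treated as authoritative when two sources disagree.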

Scalable Data Storage Architectures

As enterprises generate exponentially growing volumes of information, scalable storage solutions become essential. Traditional systems often struggle with performance bottlenecks, security gaps, and rising costs.

Techmango designs cloud-first storage architectures using platforms such as Amazon S3, Google BigQuery, Snowflake, and Azure Data Lake. These architectures are built to handle petabyte-scale workloads, maintain security and compliance, and optimize cost-efficiency. With this foundation, enterprises can confidently expand their data footprint while maintaining operational agility.

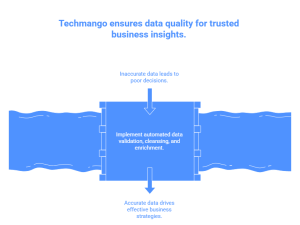

Elevating Data Quality for Trusted Insights

Data-driven decisions are only as good as the data behind them. Low-quality or incomplete data leads to operational inefficiency and poor strategic decisions. Techmango embeds data quality management in its engineering work so that business units can rely on trusted insights. Automated validation, cleansing, and enrichment processes run across both data and workflows to keep datasets accurate and complete. Because the quality framework is tied back to business KPIs, enterprises can trust the analytics models, AI models, and strategic decisions built on that data.
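The validation, cleansing, and enrichment steps described above can be sketched as a three-stage check. This is only an illustrative outline: the required fields, the negative-amount rule, and the country-to-region lookup are assumed for the example and would be replaced by rules derived from real business KPIs.

```python
from __future__ import annotations

REQUIRED_FIELDS = ("order_id", "amount", "country")  # hypothetical schema
REGION_BY_COUNTRY = {"DE": "EMEA", "US": "AMER", "IN": "APAC"}  # illustrative lookup


def cleanse(record: dict) -> dict:
    """Trim whitespace on string values and upper-case the country code."""
    cleaned = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
    if isinstance(cleaned.get("country"), str):
        cleaned["country"] = cleaned["country"].upper()
    return cleaned


def validate(record: dict) -> list[str]:
    """Return rule violations; an empty list means the record passes."""
    errors = [f"missing {f}" for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount < 0:
        errors.append("negative amount")
    return errors


def enrich(record: dict) -> dict:
    """Add a region derived from the country code."""
    return {**record, "region": REGION_BY_COUNTRY.get(record["country"], "UNKNOWN")}


def run_quality_checks(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Cleanse, validate, then enrich; rejected records keep their error list."""
    accepted, rejected = [], []
    for raw in records:
        record = cleanse(raw)
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
        else:
            accepted.append(enrich(record))
    return accepted, rejected


rows = [
    {"order_id": "A1", "amount": 20.0, "country": " de "},
    {"order_id": "A2", "amount": -5, "country": "US"},
    {"order_id": "A3", "amount": 7, "country": ""},
]
accepted, rejected = run_quality_checks(rows)
```

Keeping rejected records together with their error messages, rather than silently dropping them, is what makes it possible to report quality as a measurable rate.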

Advanced Data Processing and Transformation

Raw data delivers little value without effective processing. Enterprises require platforms that can transform information into formats optimized for analysis and machine learning.

Techmango builds advanced data transformation pipelines using Spark, Databricks, and Python-based frameworks. These systems enable scalable processing of structured, semi-structured, and unstructured data, turning complexity into actionable intelligence. This capability supports use cases ranging from predictive analytics to personalized customer experiences.
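One common transformation step is turning semi-structured records into a tabular shape that analytics tools can consume. The sketch below shows the idea in plain Python rather than Spark or Databricks, so it stays runnable without a cluster; the `flatten` and `to_rows` helpers and the sample events are assumptions made for illustration.

```python
from __future__ import annotations


def flatten(record: dict, parent: str = "", sep: str = "_") -> dict:
    """Flatten nested dicts into one level, joining nested keys with `sep`."""
    flat: dict = {}
    for key, value in record.items():
        name = f"{parent}{sep}{key}" if parent else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


def to_rows(records: list[dict]) -> tuple[list[str], list[list]]:
    """Return sorted column names and one row per record; absent fields become None."""
    flat_records = [flatten(r) for r in records]
    columns = sorted({name for r in flat_records for name in r})
    rows = [[r.get(col) for col in columns] for r in flat_records]
    return columns, rows


# Usage: two events with different nesting end up in one consistent table.
events = [
    {"id": 1, "user": {"name": "ana", "geo": {"country": "DE"}}},
    {"id": 2, "user": {"name": "raj"}, "amount": 9.5},
]
columns, rows = to_rows(events)
```

In a distributed engine the same logic would typically be expressed as column operations, but the schema-alignment problem it solves is the same.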

Strengthening Data Governance and Compliance

Global businesses must navigate a complex web of privacy and security regulations. Techmango builds governance into each data engineering engagement so that businesses can maximize the value of their data assets while remaining compliant.

Lineage tracking, metadata management, and role-based access controls give organizations trust in their data systems. From healthcare to finance to manufacturing, organizations gain governance processes that adapt to changing compliance standards.
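Lineage tracking and role-based access control can be pictured with a very small catalog model. The following is a simplified sketch, not a real governance product: the role names, the `Dataset` and `Catalog` classes, and the dataset names are hypothetical.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
}


def is_allowed(role: str, action: str) -> bool:
    """Role-based access check; unknown roles have no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())


@dataclass
class Dataset:
    name: str
    upstream: list[str] = field(default_factory=list)  # direct parent dataset names


class Catalog:
    """Minimal metadata catalog that records which datasets feed which."""

    def __init__(self) -> None:
        self._datasets: dict[str, Dataset] = {}

    def register(self, dataset: Dataset) -> None:
        self._datasets[dataset.name] = dataset

    def lineage(self, name: str) -> list[str]:
        """Return all transitive upstream dataset names, nearest first."""
        seen: list[str] = []
        queue = deque(self._datasets[name].upstream)
        while queue:
            current = queue.popleft()
            if current in seen:
                continue
            seen.append(current)
            if current in self._datasets:  # external sources may be unregistered
                queue.extend(self._datasets[current].upstream)
        return seen


catalog = Catalog()
catalog.register(Dataset("raw_orders"))
catalog.register(Dataset("clean_orders", upstream=["raw_orders"]))
catalog.register(Dataset("revenue_report", upstream=["clean_orders", "fx_rates"]))
report_lineage = catalog.lineage("revenue_report")
```

With lineage recorded this way, an auditor can answer which sources feed a given report, and the access check can be applied at each of those sources.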

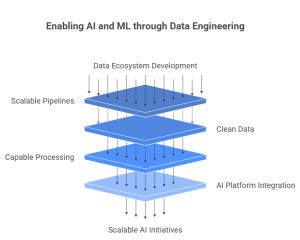

Enabling AI and Machine Learning at Scale

Data engineering is essential to enabling organizations to adopt AI and ML. Without scalable pipelines, clean data, and capable processing, enterprises cannot operationalize their AI strategies.

Techmango enables advanced analytics and ML workloads by building data ecosystems that integrate with AI and ML platforms such as TensorFlow, PyTorch, and MLflow. This lets organizations deploy scalable AI initiatives within their operational boundaries, accelerating time-to-value for predictive modeling, automation, and real-time analytics.

Real-World Business Applications

Techmango’s scalable data engineering model has enabled organizations across industries to:

- Retail: Provide real-time customer analytics to drive personalized engagement strategies.

- Healthcare: Create unified systems from siloed patient records to deliver better care.

- Finance: Implement high-performance processing pipelines to strengthen fraud monitoring.

- Manufacturing: Utilize IoT data for predictive maintenance while driving agility.

These examples show how scalable data engineering produces measurable impact for organizations operating in complex, fast-moving environments.

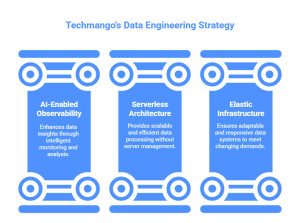

Preparing for the Future of Data Engineering

The future of data engineering will rely on automation, real-time intelligence, and sustainable architectures. Techmango prepares enterprises for that future with AI-enabled observability, serverless architecture, and elastic infrastructure.

Techmango is constantly evolving its data engineering services to provide enterprises with scalability, performance, and resilience for evolving business needs. Our approach is centered on more than just the technology systems being built; we want organizations to create sustained business value from their information assets.

Conclusion

Scalable data engineering has become a necessity for enterprises that want to strengthen their digital core. Techmango helps enterprises prepare for the future of data with engineering capabilities across ingestion, integration, storage, quality, governance, and advanced processing.

Scalable data engineering helps enterprises meet strategic objectives more efficiently and accelerate analytics, leading to better decision-making. For executives charged with shaping the next period of growth, partnering with Techmango is a practical first step toward becoming a resilient, data-powered enterprise.

Frequently Asked Questions

Scalable data engineering refers to building data systems that can efficiently handle growing data volumes, diverse sources, and complex processing needs. It ensures that as data grows, performance, reliability, and insights remain consistent across business functions.

Techmango designs real-time and batch ingestion pipelines using technologies like Apache Kafka, AWS Kinesis, and Azure Event Hubs. These enable continuous data flow from multiple systems into centralized repositories, ensuring low latency and high reliability for analytics and reporting.

Techmango integrates scalability, quality, and governance into every stage—covering ingestion, integration, transformation, storage, and observability. With automation, cloud-native frameworks, and compliance controls, we ensure performance and resilience across industries.

By building clean, high-quality, and structured datasets, Techmango enables organizations to operationalize AI and ML models effectively. Integration with frameworks like TensorFlow, PyTorch, and MLflow supports predictive modeling, automation, and real-time analytics at scale.

Organizations can achieve faster analytics, improved decision-making, better customer experiences, real-time insights, enhanced compliance, and reduced operational costs. Industries like retail, healthcare, finance, and manufacturing have already realized measurable business value from this approach.
